©2015 -

Information Technology

Oracle Grid Cluster Installation With NFS -

So far, we have prepared the following for the Oracle Grid cluster installation:

- The required network setup
- Public node VIPs
- Private IP configuration for the cluster interconnect
- SCAN IP configured in the hosts file
- OS groups and the Grid user (oragrid)
- The NFS repository for the OCR and voting disk data
- SSH key authentication for the Grid user
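Before running the verification script, you can double-check the hosts-file part of this list by confirming that every cluster hostname has an entry. The following is a minimal Python sketch. It assumes the names are resolved from /etc/hosts rather than DNS. The hostnames in the usage note are hypothetical placeholders, not the exact names used in this setup.

```python
from typing import Dict, List


def parse_hosts(text: str) -> Dict[str, str]:
    """Map every hostname or alias in hosts-file text to its IP address."""
    mapping: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding blanks
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            # Keep the first entry for a name, as a hosts-file lookup would.
            mapping.setdefault(name, ip)
    return mapping


def missing_host_entries(text: str, required: List[str]) -> List[str]:
    """Return the required hostnames that have no entry in the hosts text."""
    mapping = parse_hosts(text)
    return [name for name in required if name not in mapping]
```

On each node you could call `missing_host_entries(open("/etc/hosts").read(), ["s11node1", "s11node2", "my-scan-name"])`, where `my-scan-name` stands in for your own SCAN name. An empty list means every name has an entry.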

Now it is time to run the Cluster Verification Utility script (runcluvfy.sh) to validate all of this preparation work.

```
oragrid@s11node1:~/install/grid$ ls
install response rpm runInstaller stage
readme.html rootpre.sh runcluvfy.sh sshsetup welcome.html
oragrid@s11node1:~/install/grid$
```

./runcluvfy.sh stage -

```
oragrid@s11node1:~/install/grid$ ./runcluvfy.sh stage -
Performing pre-
Checking node reachability...
Node reachability check passed from node "s11node1"
Checking user equivalence...
User equivalence check passed for user "oragrid"
Checking node connectivity...
Checking hosts config file...
Verification of the hosts config file successful
Node connectivity passed for subnet "172.16.33.0" with node(s) s11node2,s11node1
TCP connectivity check passed for subnet "172.16.33.0"
Node connectivity passed for subnet "192.168.65.0" with node(s) s11node2,s11node1
TCP connectivity check passed for subnet "192.168.65.0"
Interfaces found on subnet "172.16.33.0" that are likely candidates for VIP are:
s11node2 net0:172.16.33.121
s11node1 net0:172.16.33.120 net0:172.16.33.99
Interfaces found on subnet "192.168.65.0" that are likely candidates for a private interconnect are:
s11node2 net1:192.168.65.112
s11node1 net1:192.168.65.111
Checking subnet mask consistency...
Subnet mask consistency check passed for subnet "172.16.33.0".
Subnet mask consistency check passed for subnet "192.168.65.0".
Subnet mask consistency check passed.
Node connectivity check passed
Checking multicast communication...
Checking subnet "172.16.33.0" for multicast communication with multicast group "230.0.1.0"...
Check of subnet "172.16.33.0" for multicast communication with multicast group "230.0.1.0" passed.
Checking subnet "192.168.65.0" for multicast communication with multicast group "230.0.1.0"...
Check of subnet "192.168.65.0" for multicast communication with multicast group "230.0.1.0" passed.
Check of multicast communication passed.
Total memory check passed
Available memory check passed
Swap space check failed
Check failed on nodes:
s11node2,s11node1
Free disk space check passed for "s11node2:/var/tmp/"
Free disk space check passed for "s11node1:/var/tmp/"
Check for multiple users with UID value 1003 passed
User existence check passed for "oragrid"
Group existence check failed for "oinstall"
Check failed on nodes:
s11node2,s11node1
Group existence check failed for "dba"
Check failed on nodes:
s11node2,s11node1
Membership check for user "oragrid" in group "oinstall" [as Primary] failed
Check failed on nodes:
s11node2,s11node1
Membership check for user "oragrid" in group "dba" failed
Check failed on nodes:
s11node2,s11node1
Run level check passed
Hard limits check passed for "maximum open file descriptors"
Soft limits check passed for "maximum open file descriptors"
Hard limits check passed for "maximum user processes"
Soft limits check passed for "maximum user processes"
System architecture check passed
Kernel version check passed
Kernel parameter check passed for "project.max-
Check for multiple users with UID value 0 passed
Current group ID check passed
Starting check for consistency of primary group of root user
Check for consistency of root user's primary group passed
Starting Clock synchronization checks using Network Time Protocol(NTP)...
NTP Configuration file check started...
No NTP Daemons or Services were found to be running
Clock synchronization check using Network Time Protocol(NTP) passed
Core file name pattern consistency check passed.
User "oragrid" is not part of "root" group. Check passed
Default user file creation mask check passed
Checking consistency of file "/etc/resolv.conf" across nodes
File "/etc/resolv.conf" does not have both domain and search entries defined
domain entry in file "/etc/resolv.conf" is consistent across nodes
All nodes have one domain entry defined in file "/etc/resolv.conf"
Checking all nodes to make sure that domain is "vlabs.net private.net" as found on node "s11node2"
All nodes of the cluster have same value for 'domain'
search entry in file "/etc/resolv.conf" is consistent across nodes
The DNS response time for an unreachable node is within acceptable limit on all nodes
File "/etc/resolv.conf" is consistent across nodes
Time zone consistency check passed
Pre-
oragrid@s11node1:~/install/grid$
```
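The failed checks are easy to miss in a run this long. The following is a minimal Python sketch that assumes you saved the cluvfy output to a text file. It collects each failed check together with the nodes listed under it. It relies only on the line layout seen in the output above (a failure message, then "Check failed on nodes:", then a comma-separated node list), not on any documented cluvfy format.

```python
from typing import Dict, List


def failed_checks(output: str) -> Dict[str, List[str]]:
    """Map each failed check message to the nodes it failed on."""
    # Ignore blank lines so the layout matches whether or not they are present.
    lines = [ln.strip() for ln in output.splitlines() if ln.strip()]
    results: Dict[str, List[str]] = {}
    i = 0
    while i < len(lines):
        line = lines[i]
        if "failed" in line and line != "Check failed on nodes:":
            nodes: List[str] = []
            # In the output above, the node list follows a "Check failed on nodes:" line.
            if i + 2 < len(lines) and lines[i + 1] == "Check failed on nodes:":
                nodes = [n.strip() for n in lines[i + 2].split(",") if n.strip()]
                i += 2  # skip the header and node-list lines
            results[line] = nodes
        i += 1
    return results
```

For the run above, this would report the swap space check plus the four group existence and membership checks, each failing on s11node2 and s11node1.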

NOTE: At this point you will notice that the group existence and group membership checks failed. This is expected, because I named the OS groups differently from the defaults (oinstall and dba) that the script checks for. The swap space check failure can also be ignored.
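Because these failures only reflect the non-default group names, it is still worth confirming that oragrid really belongs to the groups you created. On Solaris, `id -a oragrid` shows this directly. The Python sketch below does the same from the text of /etc/passwd and /etc/group. It assumes both files are the only source of users and groups (no NIS or LDAP). The group names in the test data are hypothetical.

```python
from typing import Set


def groups_for_user(user: str, passwd_text: str, group_text: str) -> Set[str]:
    """Return the primary and supplementary group names of `user`."""
    primary_gid = None
    for line in passwd_text.splitlines():
        fields = line.split(":")
        # passwd format: name:password:uid:gid:gecos:home:shell
        if len(fields) >= 4 and fields[0] == user:
            primary_gid = fields[3]
            break
    if primary_gid is None:
        raise KeyError(f"user {user!r} not found in passwd data")

    groups: Set[str] = set()
    for line in group_text.splitlines():
        fields = line.split(":")
        # group format: name:password:gid:member1,member2,...
        if len(fields) < 4:
            continue
        name, _password, gid, members = fields[:4]
        if gid == primary_gid or user in members.split(","):
            groups.add(name)
    return groups
```

Usage: `groups_for_user("oragrid", open("/etc/passwd").read(), open("/etc/group").read())`. Run it on each node and compare the results.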

If you are using an SSH terminal in a Microsoft Windows environment to connect to the primary node s11node1, make sure X11 forwarding is enabled so that the Unix X Window GUI can be displayed. Details on setting this up can be found in my article -
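When the installer GUI fails to appear, a common cause is that DISPLAY is not set in the SSH session. The Python sketch below only checks that DISPLAY is set and has the usual `[host]:display[.screen]` shape. It does not prove that an X server is reachable. With OpenSSH X11 forwarding, the value is typically something like `localhost:10.0`.

```python
import os
import re
from typing import Mapping, Optional

# An X11 DISPLAY value has the form "[host]:display[.screen]", for example
# ":0" for a local display or "localhost:10.0" when tunnelled over SSH.
DISPLAY_RE = re.compile(r"^[\w.\-]*:\d+(\.\d+)?$")


def display_looks_valid(env: Optional[Mapping[str, str]] = None) -> bool:
    """Return True if DISPLAY is set and has a plausible X11 format."""
    if env is None:
        env = os.environ
    return bool(DISPLAY_RE.match(env.get("DISPLAY", "")))
```

Calling `display_looks_valid()` with no argument checks the current environment, so you can run it in the same session before launching runInstaller.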

Now, let’s run the 11g Grid installer. The Download Software Updates window should appear.

```
oragrid@s11node1:~/install/grid$ ./runInstaller
Starting Oracle Universal Installer...
Checking Temp space: must be greater than 180 MB. Actual 4839 MB Passed
Checking swap space: must be greater than 150 MB. Actual 5500 MB Passed
Checking monitor: must be configured to display at least 256 colors. Actual 16777216 Passed
Preparing to launch Oracle Universal Installer from /tmp/OraInstall2015-
oragrid@s11node1:~/install/grid$
```
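The installer's pre-launch checks compare three values against fixed minimums: temp space greater than 180 MB, swap greater than 150 MB, and at least 256 colors. The following is a minimal Python sketch that applies the same thresholds. It treats MB as 1024 × 1024 bytes, which is an assumption. Free temp space is read with `shutil.disk_usage`. Swap size and color depth have to be supplied by you, for example swap from `swap -s` on Solaris.

```python
import shutil
from typing import Dict

# Thresholds as printed by runInstaller in the session above.
MIN_TEMP_MB = 180   # "must be greater than 180 MB"
MIN_SWAP_MB = 150   # "must be greater than 150 MB"
MIN_COLORS = 256    # "must be configured to display at least 256 colors"


def free_mb(path: str = "/tmp") -> int:
    """Free space on the filesystem holding `path`, in MB (1024 * 1024 bytes)."""
    return shutil.disk_usage(path).free // (1024 * 1024)


def installer_minimums(temp_mb: int, swap_mb: int, colors: int) -> Dict[str, bool]:
    """Apply the installer's pass/fail rules to the supplied values."""
    return {
        "temp": temp_mb > MIN_TEMP_MB,    # strictly greater than
        "swap": swap_mb > MIN_SWAP_MB,    # strictly greater than
        "colors": colors >= MIN_COLORS,   # "at least"
    }
```

With the values reported above, `installer_minimums(4839, 5500, 16777216)` passes all three checks.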

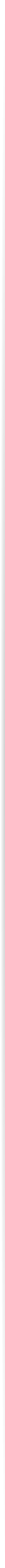

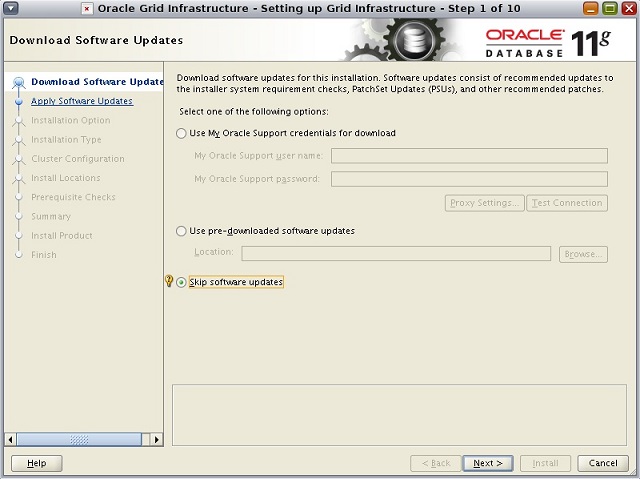

Step 1. Skip software updates.

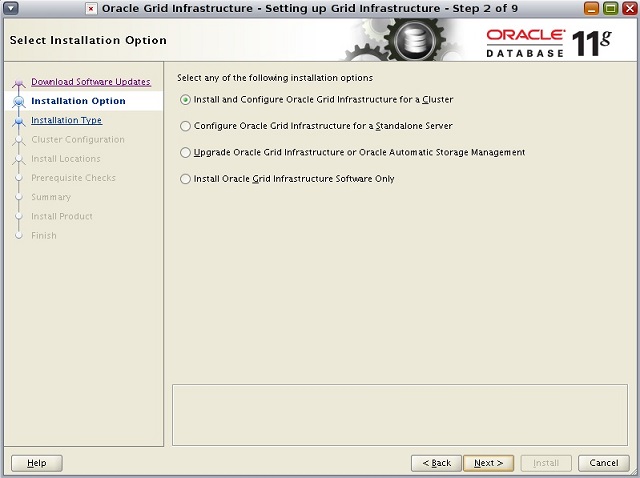

Step 2. Select "Install and Configure Oracle Grid Infrastructure for a Cluster".

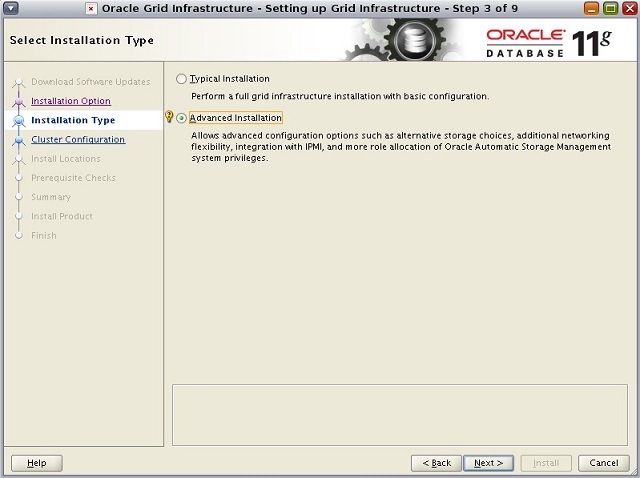

Step 3. Installation Type: Advanced Installation

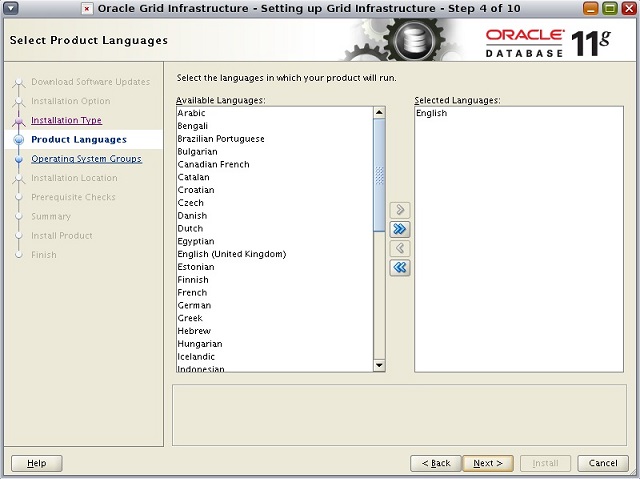

Step 4. Select "English" as the language.

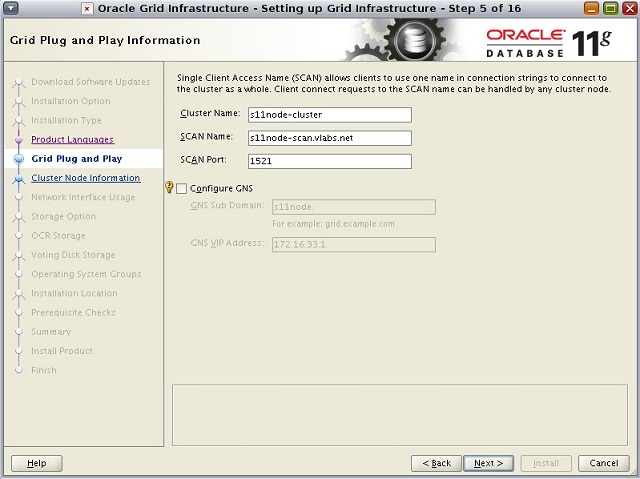

Step 5. Enter Cluster name and SCAN properties.

Uncheck -

Cluster Name: s11node-

SCAN Name: s11node-

SCAN Port: 1521
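Before moving on, you can confirm from each node that the SCAN name resolves. Oracle recommends defining the SCAN in DNS with three addresses. With the hosts-file approach used in this setup, expect a single address. A minimal Python sketch using the standard resolver (the name in the usage note is a placeholder for your own SCAN name):

```python
import socket
from typing import List


def resolve_ipv4(name: str, port: int = 1521) -> List[str]:
    """Return the sorted, de-duplicated IPv4 addresses `name` resolves to."""
    infos = socket.getaddrinfo(name, port, socket.AF_INET, socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, (address, port)).
    return sorted({info[4][0] for info in infos})
```

Usage: `resolve_ipv4("my-scan-name")`. A `socket.gaierror` means the name does not resolve on that node.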

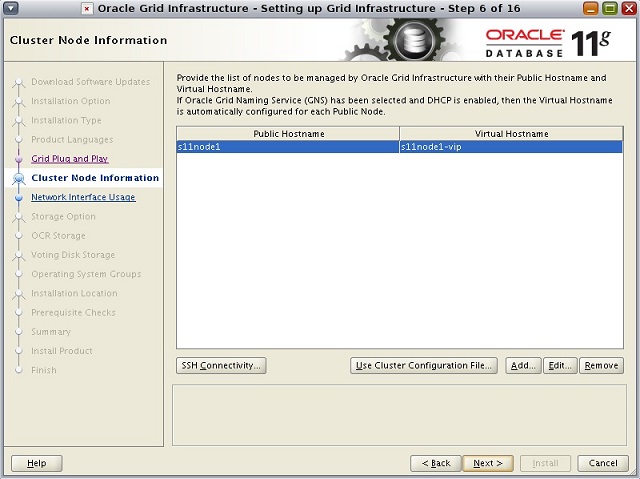

Step 6. Provide additional node members for the cluster by clicking the ADD button.

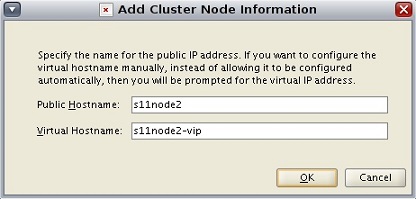

Step 7. Enter the second node hostname properties.

Public Hostname: s11node2

Virtual Hostname: s11node2-
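A node's virtual IP must be on the same subnet as its public interface, which cluvfy reported above as 172.16.33.0. The following is a minimal Python sketch of that check. It assumes a 255.255.255.0 netmask, which the output above does not state. Substitute your own addresses and mask.

```python
import ipaddress


def same_subnet(public_ip: str, vip: str, netmask: str) -> bool:
    """True if `vip` falls inside the network of `public_ip`/`netmask`."""
    # strict=False lets us pass a host address rather than the network address.
    net = ipaddress.ip_network(f"{public_ip}/{netmask}", strict=False)
    return ipaddress.ip_address(vip) in net
```

For example, `same_subnet("172.16.33.121", "172.16.33.99", "255.255.255.0")` returns True, while an address on the private 192.168.65.0 subnet returns False.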